Every good codebase has automated tests. They give developers confidence in the features and fixes they ship. Integration tests help validate that software behaves correctly across boundaries, including boundaries with third-party services that are not under your control. Unfortunately, integration tests can be both flaky and slow.

For example, consider the (contrived) case where we want a reliable script that gets the number of /r/aww subreddit posts with either of the words 'cat' or 'dog' in the title, along with a script to run it and print the output:
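Here is a minimal sketch of what such a script could look like. It is an illustration rather than the original code: the function names are hypothetical, whole-word matching (with an optional plural) is my own reading of "containing the words", and it assumes Node 18+ for the global `fetch` plus Reddit's public JSON listing at `/r/aww.json`.

```javascript
// Hypothetical sketch: count /r/aww posts whose title mentions "cat" or "dog".

// True when the title contains "cat" or "dog" as a whole word
// (optionally plural), ignoring case. "Category" does not count.
function mentionsCatOrDog(title) {
  return /\b(cats?|dogs?)\b/i.test(title);
}

// Pure helper: how many of the given titles mention a cat or a dog.
function countCatDogTitles(titles) {
  return titles.filter(mentionsCatOrDog).length;
}

// Network part: fetch the listing and pull out the post titles.
// Assumes the listing shape { data: { children: [{ data: { title } }] } }.
async function fetchAwwTitles() {
  const res = await fetch('https://www.reddit.com/r/aww.json', {
    headers: { 'User-Agent': 'cat-dog-counter-example' },
  });
  if (!res.ok) throw new Error(`Unexpected status ${res.status}`);
  const listing = await res.json();
  return listing.data.children.map((child) => child.data.title);
}

async function main() {
  const titles = await fetchAwwTitles();
  console.log(countCatDogTitles(titles));
}

// Only hit the network when asked to: `node count.js --live`
if (process.argv.includes('--live')) {
  main().catch((err) => {
    console.error(err);
    process.exitCode = 1;
  });
}
```

Keeping the counting logic separate from the network call means the counting can be checked against a fixed list of titles, while the part that talks to reddit.com is the piece an integration test has to cover.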

Great! While I'm pretty confident that this works, I'm not 100% sure it will always work, so I should write an integration test for it.

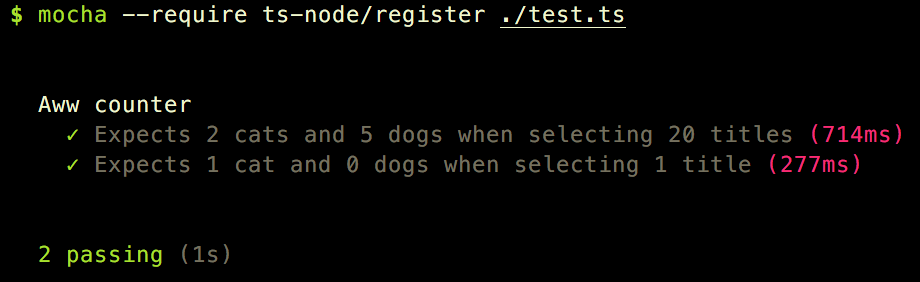

It works - time to merge it, right?

Well, not exactly. There are two problems with this test: one glaring and one hidden.

- The glaring problem: Since we don't control the content or responses on reddit.com, the titles in the response can change over time, breaking our once-valid tests.

- The hidden problem: The speed of this test depends on network conditions. By itself, this test runs in a small amount of time. However, an entire suite of network-based tests takes a long time to complete. If the repo had hundreds of tests, the feedback cycle would be several minutes. Integration test runtime can build up quickly and become a hard problem to solve.

We can kill two birds with one stone through response mocking. A library like nock would serve us just fine, but we would need to manage the mocked API endpoints and responses. Instead, we can use mocha-tape-deck, which manages the mock fixtures based on real API calls. Here's what the test looks like using mocha-tape-deck:

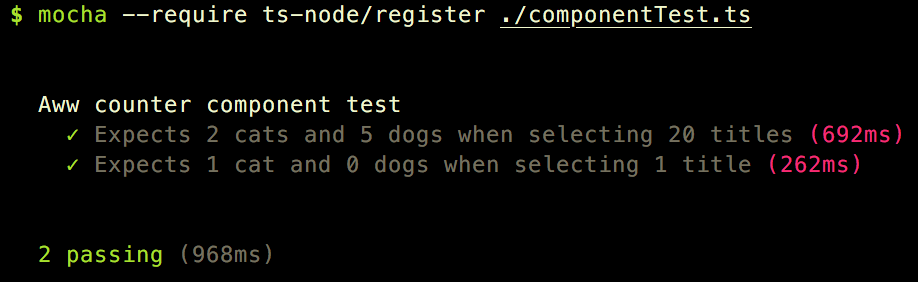

After we run the script once we get a result that seems a bit confusing at first:

The tests took almost the same amount of time to run, so it might seem that nothing has changed!

This is only true for the first run. Mocha-tape-deck uses fixtures saved as .cassette files, which live in the directory provided to the TapeDeck constructor (the directory is created if it does not exist). If it cannot find any .cassette files, it generates them by running the test and recording the HTTP requests and responses, storing them as .cassette files in the cassette directory. Upon inspection, we'll find that the ./cassettes directory has two cassette files, each named after the full name of its test with .cassette appended. If we ever need to re-record a test, we can delete that particular .cassette file. Additionally, we can commit our .cassette files to version control and actually see how our compatibility with the third-party APIs has evolved over time.

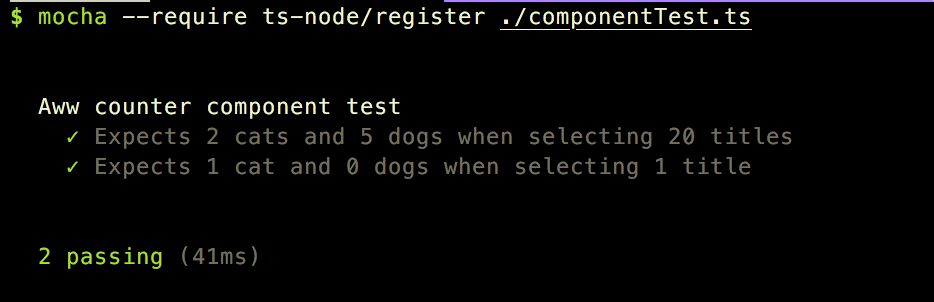
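To make the record-then-replay idea concrete, here is a small self-contained sketch of the mechanism described above. It is not mocha-tape-deck's actual implementation or API: `withCassette` is a hypothetical helper, the cassette is stored as plain JSON, and the "live call" is a synchronous callback standing in for a real HTTP request, purely to keep the example short.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Hypothetical helper illustrating record/replay; not the library's real code.
// `liveCall` stands in for the real HTTP request.
function withCassette(cassetteDir, testName, liveCall) {
  // Create the cassette directory if it does not exist yet.
  fs.mkdirSync(cassetteDir, { recursive: true });

  // One file per test: the test's full name with ".cassette" appended.
  const cassettePath = path.join(cassetteDir, `${testName}.cassette`);

  if (fs.existsSync(cassettePath)) {
    // Replay: return the recorded response without touching the network.
    return JSON.parse(fs.readFileSync(cassettePath, 'utf8'));
  }

  // Record: perform the real call once and save what came back.
  const response = liveCall();
  fs.writeFileSync(cassettePath, JSON.stringify(response, null, 2));
  return response;
}
```

The first call for a given test name pays the full cost of the live request. Every later call reads the file instead, and deleting that one file forces a fresh recording the next time the test runs.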

Now that we have our cassettes ready, let's see how the tape deck performs with them loaded.

From ~1000 ms down to ~40 ms! That's roughly 25 times faster, or more than an order of magnitude.

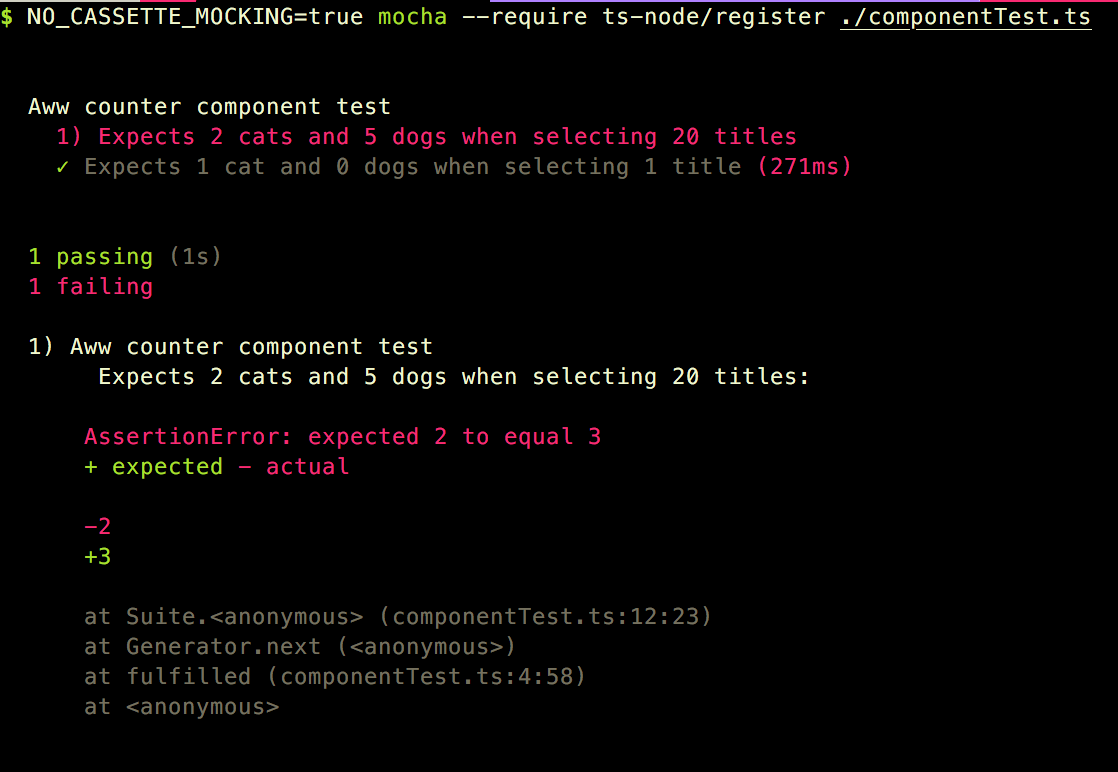
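To put those timings in perspective, here is a quick back-of-the-envelope calculation. The per-test timings are the approximate figures quoted above. The suite size of 300 tests is a hypothetical number I picked to stand in for "hundreds of tests", and the estimate assumes the tests run one after another.

```javascript
// Approximate per-test timings from the runs above.
const LIVE_MS = 1000;
const REPLAY_MS = 40;
// Hypothetical suite size, chosen only for illustration.
const TEST_COUNT = 300;

// How many times faster the replayed run is.
function speedup(beforeMs, afterMs) {
  return beforeMs / afterMs;
}

// Total wall-clock seconds for a suite that runs sequentially.
function suiteSeconds(testCount, msPerTest) {
  return (testCount * msPerTest) / 1000;
}

const factor = speedup(LIVE_MS, REPLAY_MS);
console.log(factor);                            // 25
console.log(Math.log10(factor).toFixed(1));     // "1.4" orders of magnitude
console.log(suiteSeconds(TEST_COUNT, LIVE_MS)); // 300 seconds, i.e. 5 minutes
console.log(suiteSeconds(TEST_COUNT, REPLAY_MS)); // 12 seconds
```

Under those assumptions, a five-minute feedback cycle shrinks to about twelve seconds.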

We've verified that mocha-tape-deck can speed up tests. Now let's check repeatability. If the environment variable NO_CASSETTE_MOCKING is set, mocha-tape-deck ignores the cassettes and runs the test as it normally would.

This environment variable is the key to keeping your integration tests fast, reliable, and up to date. If you need to verify that tests are not stale against a live third-party service, you can toggle your mocked integration tests into live integration tests by setting NO_CASSETTE_MOCKING.

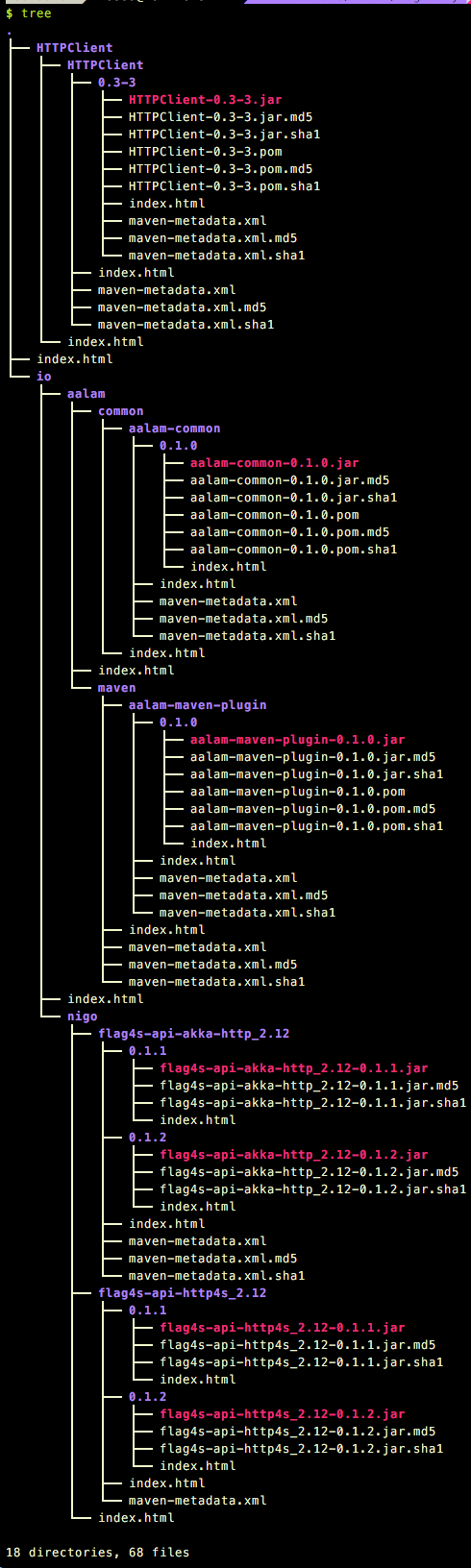
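The toggle boils down to a small decision made before each test runs. The sketch below illustrates that decision and is not the library's code: `chooseMode` is a hypothetical function, and treating any non-empty value of NO_CASSETTE_MOCKING as "set" is my assumption.

```javascript
// Hypothetical sketch of the mode decision; not mocha-tape-deck's real code.
// Assumption: any non-empty value of NO_CASSETTE_MOCKING counts as "set".
function cassetteMockingEnabled(env = process.env) {
  return !env.NO_CASSETTE_MOCKING;
}

// Decide how a test should run given the environment and whether
// a cassette has already been recorded for it.
function chooseMode(env, cassetteExists) {
  if (!cassetteMockingEnabled(env)) return 'live'; // ignore cassettes entirely
  return cassetteExists ? 'replay' : 'record';
}

console.log(chooseMode({}, false));                             // record
console.log(chooseMode({}, true));                              // replay
console.log(chooseMode({ NO_CASSETTE_MOCKING: 'true' }, true)); // live
```

A setup like this lets the same test file serve two purposes: a fast, replayed run on every commit, and an occasional live run to catch drift in the third-party API.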

For a less contrived example, let's look at a process that runs under the hood at FOSSA: indexing Maven dependencies by their POM hashes. Maven registries such as Maven Central can be traversed like a filesystem, with subdirectories for modules, their metadata, and their source. Our first-pass implementation of the indexer tests used mocking with nock. While this worked, it was a massive pain to maintain. It required files that perfectly mimicked Maven Central and its POM files. When we found edge cases, it was painful to add those cases to the list.

Just to showcase the pain, here's the file directory tree of the mock fixture:

I dreaded dealing with any new bugs that needed to be covered in the tests, because the fixtures had become an untenable, unmanageable mess. Mocha-tape-deck takes the burden of managing these massive fixtures away from the developer.

At the end of the day, mocha-tape-deck is not a one-size-fits-all solution for all tests and all cases. But it certainly keeps a lazy developer happy in many cases, and it offers many advantages over a run-of-the-mill integration test.

Check it out to see if it's right for you!